AI-driven voice-over and dubbing have transformed the global content production pipeline. What once required recording studios, voice actors, and complex post-production workflows can now be done in minutes with synthetic speech technology.

However, one misunderstanding persists: many assume that dubbing is simply “subtitles with audio.”

In reality, AI voice-over/dubbing and subtitle creation are fundamentally different disciplines, and they call for completely different ways of preparing scripts, adjusting timing, and managing quality.

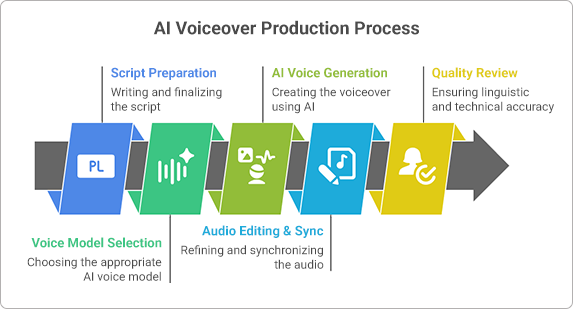

Most AI video localization platforms today follow a similar pattern:

- extract a transcript or subtitle file from the source video,

- machine-translate it,

- run human post-editing on the translated text, and then

- feed that text into a text-to-speech engine to generate target-language audio.

When translated subtitle text is pushed straight into TTS like this, without first being rewritten as spoken dialogue, the results often sound stiff or unnatural, especially for distant language pairs such as English↔Korean or English↔Japanese.

This article outlines the best practices for high-quality AI voice-over/dubbing and clearly explains how it differs from traditional subtitle work.

How AI Voice-Over/Dubbing Differs From Subtitle Work

1. Subtitles are for reading. Dubbing is for listening.

Subtitles deliver written information on screen.

Dubbing delivers spoken language that must sound natural.

Because of this difference:

- Subtitle scripts tend to be concise and text-focused.

- Voice-over scripts must sound like real speech—clear, rhythmic, and expressive.

For example, a subtitle might say:

“Select your language in the settings menu.”

A natural dubbing line could be:

“Now go into the settings menu and choose your language.”

Both lines convey the same message, but the second one is written to be spoken, not read.

A subtitle-ready sentence often cannot be used for dubbing without this kind of rewrite.

2. Timing works differently

Subtitles only need to match on-screen timing (in/out timestamps).

AI dubbing must match:

- Speech duration

- Pauses and rhythm

- On-screen lip movement (if the speaker is visible)

- Emotional pace

This is why AI dubbing requires:

- Careful punctuation control

- Break tags or explicit pause markers

- Basic prosody adjustments (where to pause, where to stress words)

These steps simply don’t exist in a typical subtitle workflow.
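A rough way to see why duration matters is to estimate how long a line takes to say and compare it with the subtitle slot it has to fill. The sketch below assumes a fixed speaking rate of 150 words per minute and a 10% allowed overrun. Both numbers are placeholders, and word counts are a poor proxy for languages such as Japanese that don't put spaces between words.

```python
from dataclasses import dataclass

# Placeholder speaking rate; real rates vary by language, voice, and content.
WORDS_PER_MINUTE = 150.0


@dataclass
class Segment:
    text: str
    start_s: float  # subtitle in-time, in seconds
    end_s: float    # subtitle out-time, in seconds

    @property
    def slot_s(self) -> float:
        return self.end_s - self.start_s


def estimated_speech_s(text: str, wpm: float = WORDS_PER_MINUTE) -> float:
    """Rough spoken duration: whitespace-separated word count at a fixed rate."""
    return len(text.split()) * 60.0 / wpm


def fits_slot(seg: Segment, wpm: float = WORDS_PER_MINUTE, overrun: float = 0.1) -> bool:
    """True if the estimated speech fits the slot, allowing a small overrun."""
    return estimated_speech_s(seg.text, wpm) <= seg.slot_s * (1.0 + overrun)


seg = Segment("Now go into the settings menu and choose your language.", 12.0, 14.0)
print(estimated_speech_s(seg.text))  # 4.0
print(fits_slot(seg))                # False: about 4 s of speech for a 2 s slot
```

A line can pass a subtitle timing check and still fail this one. That is where the spoken rewrite and pause control come in.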

3. Translation tone is completely different

Subtitle translation prioritizes:

- Brevity

- Readability

- Limited characters per line

- Viewer’s visual workload

Dubbing translation prioritizes:

- Natural spoken language

- Emotional tone

- Conversational pacing

A literal subtitle translation may sound robotic or unnatural when spoken aloud. This is exactly what often happens when machine-translated, post-edited subtitle text is pushed straight into a TTS engine. Dubbing requires a spoken-language approach, not a text-based one.
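Subtitle constraints are measured in characters on screen, so they can be checked mechanically. This sketch flags lines that exceed a character limit and events whose reading speed (characters per second) is too high. The 42-character and 17-CPS limits are placeholder values; real limits come from the client's or platform's style guide. Nothing in the check measures how a line sounds, and that is the gap dubbing has to fill.

```python
# Placeholder limits; take real values from the applicable subtitle style guide.
MAX_CHARS_PER_LINE = 42
MAX_CPS = 17.0


def subtitle_issues(lines: list[str], start_s: float, end_s: float) -> list[str]:
    """Return human-readable problems for one subtitle event."""
    issues = []
    for i, line in enumerate(lines, start=1):
        if len(line) > MAX_CHARS_PER_LINE:
            issues.append(f"line {i}: {len(line)} chars > {MAX_CHARS_PER_LINE}")
    duration = end_s - start_s
    total_chars = sum(len(line) for line in lines)
    cps = total_chars / duration if duration > 0 else float("inf")
    if cps > MAX_CPS:
        issues.append(f"reading speed {cps:.1f} cps > {MAX_CPS}")
    return issues


print(subtitle_issues(["Select your language in the settings menu."], 0.0, 2.0))
# ['reading speed 21.0 cps > 17.0']
```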

4. Dubbing must reproduce emotion, intonation, and dynamics

Subtitles convey emotion through punctuation or brackets (e.g., “[sighs]”).

AI dubbing has to carry that emotion in the voice itself and still sound natural.

This means:

- Punctuation drives tonal shifts

- Pauses are intentionally placed

- Stress and emphasis must be added

- Synthetic voices must capture mood

The emotional fidelity required for dubbing is significantly higher than for subtitles.

5. Pronunciation matters far more in dubbing

Subtitles don’t care how words sound.

AI dubbing must say everything correctly, including:

- Brand names

- Medical or technical terms

- Acronyms

- Foreign words

- Product model numbers

This requires:

- Aliases (custom spellings)

- Phonetic instructions

- Custom pronunciation dictionaries

These pronunciation issues become even more pronounced between distant language pairs (for example, English↔Korean or English↔Japanese), where brand names and technical terms often don’t follow predictable phonetic rules.
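A custom pronunciation dictionary can be as simple as a project-wide table that maps each written form to the alias spelling the voice should read. The table is applied to the script before it reaches the TTS engine. In the sketch below the entries are illustrative ("AcmeX200" is a made-up product name). Matching is case-sensitive and whole-word, and longer entries are tried first so that they win over shorter entries they contain.

```python
import re

# Illustrative project dictionary: written form -> alias spelling for the TTS voice.
# "AcmeX200" is a hypothetical product name.
PRONUNCIATIONS = {
    "nginx": "engine x",
    "AcmeX200": "Acme X two hundred",
}


def apply_pronunciations(text: str, lexicon: dict[str, str]) -> str:
    """Replace whole-word, case-sensitive matches with their alias spellings.

    Uses regex word boundaries, so entries should start and end with a word character.
    """
    if not lexicon:
        return text
    # Longest entries first, so regex alternation prefers the longer term.
    keys = sorted(lexicon, key=len, reverse=True)
    pattern = re.compile(r"\b(" + "|".join(re.escape(k) for k in keys) + r")\b")
    return pattern.sub(lambda m: lexicon[m.group(1)], text)


print(apply_pronunciations("Restart nginx on the AcmeX200.", PRONUNCIATIONS))
# Restart engine x on the Acme X two hundred.
```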

6. Quality control (QC) criteria are more demanding

Subtitle QC checks:

- Timing

- Line breaks

- Typos

- Readability

AI voice-over QC requires a full audio and video review:

- Pronunciation accuracy

- Natural prosody

- Correct rhythm & pacing

- Emotional authenticity

- Lip-sync (for full dubbing)

- Audio clarity and normalization

Dubbing QC is closer to audio engineering than text editing.
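Broadcast and streaming platforms usually specify integrated loudness in LUFS (ITU-R BS.1770, EBU R128), which adds frequency weighting and gating. The sketch below uses plain RMS level in dBFS as a simplified stand-in. It assumes float samples in [-1.0, 1.0], a hypothetical -20 dBFS target, and a -1 dBFS peak ceiling.

```python
import math


def peak_dbfs(samples: list[float]) -> float:
    """Peak level in dBFS for float samples in [-1.0, 1.0]."""
    peak = max((abs(s) for s in samples), default=0.0)
    return 20.0 * math.log10(peak) if peak > 0 else float("-inf")


def rms_dbfs(samples: list[float]) -> float:
    """RMS level in dBFS (unweighted and ungated, so not LUFS)."""
    if not samples:
        return float("-inf")
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20.0 * math.log10(rms) if rms > 0 else float("-inf")


def normalization_gain_db(samples: list[float], target_rms_dbfs: float = -20.0,
                          peak_ceiling_dbfs: float = -1.0) -> float:
    """Gain that moves RMS toward the target without pushing peaks over the ceiling."""
    current = rms_dbfs(samples)
    if current == float("-inf"):
        return 0.0  # silence: nothing to normalize
    wanted = target_rms_dbfs - current
    headroom = peak_ceiling_dbfs - peak_dbfs(samples)
    return min(wanted, headroom)


quiet = [0.01, -0.01] * 100
print(round(normalization_gain_db(quiet), 1))  # 20.0
```

To apply the result, multiply every sample by `10 ** (gain_db / 20)`.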

Best Practices for High-Quality AI Voice-Over and Dubbing

1. Write scripts for speech, not reading

- Use short, natural sentences

- Avoid long clauses and heavy text

- Rewrite subtitles into spoken language

2. Use punctuation to control delivery

- Commas = short pauses

- Periods = longer pauses

- Question marks = rising tone

- Exclamation marks = emphasis

- Ellipses = hesitation or emotion
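Every mark adds a pause, so punctuation changes a line's length as well as its tone. This sketch totals the pause time implied by a line's punctuation, using made-up durations for each mark. Actual pause lengths depend on the TTS engine and voice, so treat the numbers only as a way to compare two versions of the same line.

```python
import re

# Placeholder pause lengths in milliseconds; real engines differ, and many don't document them.
PAUSE_MS = {",": 250, ".": 600, "?": 600, "!": 600, "…": 800, "...": 800}

# Match "..." before "." so an ASCII ellipsis counts once, not three times.
# Note: decimal points such as "3.5" would also be counted; a real check would skip them.
PUNCT = re.compile(r"\.\.\.|[,.?!…]")


def punctuation_pause_ms(text: str) -> int:
    """Total pause time implied by the punctuation in a line."""
    return sum(PAUSE_MS[mark] for mark in PUNCT.findall(text))


print(punctuation_pause_ms("Wait... you changed the language?"))  # 1400
```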

3. Insert break tags for precise sync

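When punctuation is not enough, insert an explicit break with a set duration (for example, an SSML break tag) to control exactly how long the voice pauses.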
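Many TTS engines accept W3C SSML, where `<break time="700ms"/>` inserts a pause of a fixed length. The sketch below assumes your engine accepts SSML; supported tags and maximum break lengths vary by provider. It joins phrases with explicit breaks and escapes the text so that characters like "&" don't break the markup.

```python
from xml.sax.saxutils import escape


def with_breaks(phrases: list[str], pauses_ms: list[int]) -> str:
    """Join phrases into an SSML document with an explicit <break> between each pair."""
    if len(pauses_ms) != len(phrases) - 1:
        raise ValueError("need exactly one pause between each pair of phrases")
    parts = [escape(phrases[0])]
    for pause, phrase in zip(pauses_ms, phrases[1:]):
        parts.append(f'<break time="{pause}ms"/>')
        parts.append(escape(phrase))
    return "<speak>" + " ".join(parts) + "</speak>"


print(with_breaks(["Open the settings menu.", "Then choose your language."], [700]))
# <speak>Open the settings menu. <break time="700ms"/> Then choose your language.</speak>
```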

4. Rewrite numbers and units for clarity

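TTS engines often misread dates, measurements, and model numbers, so write them out the way they should be spoken (for example, “3.5 mm” as “three point five millimeters”).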
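One approach is to normalize numbers and units in the script itself rather than relying on the engine. The sketch below is minimal. The unit table is illustrative, and reading model numbers digit by digit is only one convention (a brand may prefer "twenty forty"), so the project's pronunciation rules should make that call.

```python
import re

# Illustrative unit table; extend per project and language.
UNITS = {"mm": "millimeters", "cm": "centimeters", "kg": "kilograms", "km": "kilometers"}
DIGITS = "zero one two three four five six seven eight nine".split()

UNIT_PATTERN = re.compile(r"\b(\d+(?:\.\d+)?)\s*(" + "|".join(UNITS) + r")\b")


def expand_units(text: str) -> str:
    """Write unit abbreviations out in full: "3 mm" -> "3 millimeters"."""
    def repl(m: re.Match) -> str:
        value, unit = m.group(1), UNITS[m.group(2)]
        if value == "1":
            unit = unit[:-1]  # singular: "1 kilogram"
        return f"{value} {unit}"
    return UNIT_PATTERN.sub(repl, text)


def spell_model_number(model: str) -> str:
    """Read a model number character by character: "XR-2040" -> "X R two zero four zero"."""
    words = []
    for ch in model:
        if ch in "0123456789":
            words.append(DIGITS[int(ch)])
        elif ch.isalpha():
            words.append(ch.upper())
        # Separators such as "-" are dropped.
    return " ".join(words)


print(expand_units("Set the gap to 3 mm and the weight to 1 kg."))
# Set the gap to 3 millimeters and the weight to 1 kilogram.
print(spell_model_number("XR-2040"))  # X R two zero four zero
```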

5. Use aliases and phonetics for tricky words

Ensure accurate pronunciation of brand names, technical terms, and foreign words.
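In engines that accept SSML, the standard `<sub alias="...">` element swaps in a spoken form for a written one, and `<phoneme>` supplies an explicit phonetic transcription (here in IPA). The sketch below assumes SSML input. Which tags and phonetic alphabets a given voice honors varies by provider.

```python
from xml.sax.saxutils import escape, quoteattr


def sub_alias(written: str, spoken: str) -> str:
    """SSML <sub>: keep the written form in the script, but have the voice read the alias."""
    return f"<sub alias={quoteattr(spoken)}>{escape(written)}</sub>"


def phoneme_ipa(word: str, ipa: str) -> str:
    """SSML <phoneme> with an IPA transcription of the intended pronunciation."""
    return f'<phoneme alphabet="ipa" ph={quoteattr(ipa)}>{escape(word)}</phoneme>'


print(sub_alias("nginx", "engine x"))
# <sub alias="engine x">nginx</sub>
print(phoneme_ipa("tomato", "təˈmɑːtoʊ"))
# <phoneme alphabet="ipa" ph="təˈmɑːtoʊ">tomato</phoneme>
```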

6. Align audio carefully with the visuals

- Match lip movement when the speaker is visible

- Align emotional tone with the scene

- Keep pace with the original timing
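When a generated line runs longer than the original segment, a small speed-up is often enough. Past a certain point, though, the line needs to be rewritten instead. This sketch computes the speaking-rate multiplier needed to fit the slot; many engines expose such a setting, for example through SSML `<prosody rate>`. The 15% ceiling is an arbitrary assumption.

```python
def fit_rate(generated_s: float, slot_s: float, max_rate: float = 1.15) -> tuple[float, bool]:
    """Return (rate multiplier, fits) for placing generated audio into the original slot.

    A rate above 1.0 means "speak faster". If even max_rate is not enough,
    fits is False and the script should be shortened instead.
    """
    if generated_s <= 0 or slot_s <= 0:
        raise ValueError("durations must be positive")
    needed = generated_s / slot_s
    if needed <= 1.0:
        return 1.0, True        # already fits; pad the remainder with silence
    if needed <= max_rate:
        return needed, True     # a modest speed-up is enough
    return max_rate, False      # too long: rewrite the line rather than rush it


print(fit_rate(3.0, 4.0))  # (1.0, True)
print(fit_rate(6.0, 4.0))  # (1.15, False)
```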

7. Maintain style consistency across the project

- Same voice settings

- Same pronunciation rules

- Same pace and tone
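Consistency is easiest to enforce when settings are defined once per project and language, and every segment is checked against that single definition. The sketch below is minimal; the field names, voice name, and dictionary name are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VoiceSettings:
    voice: str           # hypothetical voice name in your TTS engine
    rate: float = 1.0    # speaking-rate multiplier
    pitch: float = 0.0   # pitch offset in semitones
    lexicon: str = ""    # name/version of the pronunciation dictionary in use


def inconsistent_segments(default: VoiceSettings, segments: dict[str, VoiceSettings]) -> list[str]:
    """IDs of segments whose settings differ from the project default."""
    return sorted(seg_id for seg_id, settings in segments.items() if settings != default)


project = VoiceSettings(voice="narrator-ko-1", lexicon="brand-terms-v3")
segments = {
    "001": project,
    "002": VoiceSettings(voice="narrator-ko-1", rate=1.2, lexicon="brand-terms-v3"),
}
print(inconsistent_segments(project, segments))  # ['002']
```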

8. Always perform full audio QC

Check pacing, pronunciation, emotion, timing, noise, and waveform alignment.
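Part of this check can be automated by comparing where each dubbed segment starts with where the original segment starts, so reviewers can go straight to the segments that drifted. This simplified sketch assumes you already have per-segment start times, for example from the subtitle timestamps and the TTS output timeline. The 200 ms tolerance is a placeholder, and the check narrows the listening pass rather than replacing it.

```python
def drifted_segments(source_starts: list[float], dub_starts: list[float],
                     tolerance_s: float = 0.2) -> list[int]:
    """Indexes of segments whose dubbed start differs from the source start by more than tolerance_s."""
    if len(source_starts) != len(dub_starts):
        raise ValueError("segment counts differ")
    return [
        i
        for i, (src, dub) in enumerate(zip(source_starts, dub_starts))
        if abs(dub - src) > tolerance_s
    ]


print(drifted_segments([0.0, 5.0, 10.0], [0.05, 5.5, 10.1]))  # [1]
```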

9. Assign a human “script owner” for each language

AI tools can generate voices, but they can’t judge nuance and context.

Give one linguist or localization partner clear ownership of the script in each language so they can:

- Adapt subtitle text into natural spoken dialogue

- Set pronunciation rules for brand and product names

- Approve final timing and emotional delivery before publishing

Conclusion: Subtitles and AI Dubbing Are Not the Same

Subtitles are fundamentally text localization, while AI voice-over and dubbing are audio performance engineering. The two tasks share similarities in translation, but the execution, timing, emotional delivery, and QC requirements are entirely different.

When handled properly, AI voice-over and dubbing offer:

- Faster production

- Lower cost

- Global scalability

- Consistent voice quality

- Natural, immersive multilingual audio

They are not “one-click localization,” though. The best results come from combining AI technology with human expertise in scripting, timing, pronunciation, and quality control, especially for complex language pairs and for enterprise training or marketing content.